Introduction

Apache Spark

Spark is a fast and general engine for large-scale data processing. The user writes a Driver Program containing the script that tells spark what to do with your data, and Spark builds a Directed Acyclic Graph (DAG) to optimize workflow. With a massive dataset, you can process concurrently across multiple machines.

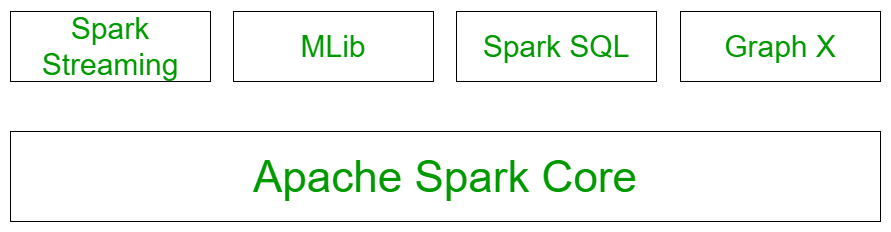

Let's take a second to discuss the components of spark:

- Spark Core - All functionalities are build on this layer (task scheduling, memory management, fault recovery, and storage systems). Also has the API that defines RDDs, which we will discuss later. There are 4 modules on this layer:

- Spark Streaming - Live data streaming of data, such as log files. These APIs are similar to RDD.

- MLib - Scalable machine learning library

- Spark SQL - Library for working with structured data. Supports Hive, parquet, json, csv, etc.

- GraphX - API for graphs and graph parallel execution. Clustering, classification, traversal, searching, and path-finding is possible in the graph. We'll come back to this much later on.

Why Scala?

You can use Spark with many languages; primarily Python, R, Java and Scala. I like Scala because it's functional, type-safe and JVM-friendly langauge. Also, since Spark is written in Scala, there is a slight overhead on running scripts in any other language.

Besides a knowledge of programming, a familiarity with SQL with make Spark very easy to learn.